Dmitry Laptev

Personal homepage

- Industry projects:

Google search quality

I am part of the Google Core Ranking team, which develops algorithmic changes to Google Search. We focus on making higher-quality and more authoritative content easily accessible to users. I cannot say much more than that :)

My PhD thesis

The thesis is a cumulative work of my four years at ETH Zurich. The common umbrella topic is the development of expert-aware algorithms in computer vision: algorithms that incorporate strong prior knowledge from field experts into modern algorithmic pipelines. The specific topics covered in the thesis are described in more detail below.

- "Towards expert-aware computer vision algorithms in medical imaging". Text and presentation.

Transformation-invariance

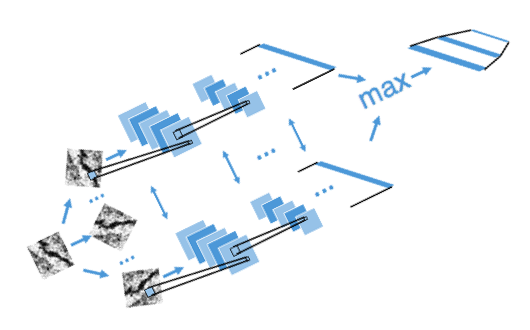

Transformation-invariance is crucial for many computer vision applications. It makes algorithms robust to nuisance variations in the data and makes the learning process more efficient. We developed two novel algorithms that combine the flexibility of general-purpose models (CNNs and Convolutional Decision Jungles) with theoretically guaranteed transformation-invariance.

- "TI-pooling: transformation-invariant pooling for feature learning in Convolutional Neural Networks" D. Laptev, N. Savinov, J.M. Buhmann, M. Pollefeys, CVPR 2016. Paper, poster and code for TensorFlow and Torch7.

- "Transformation-Invariant Convolutional Jungles" D. Laptev, J.M. Buhmann, CVPR 2015. Paper and poster.
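To make the TI-pooling idea concrete: the same feature extractor is applied to several transformed copies of the input, and the responses are max-pooled over the transformation dimension, so the output does not depend on which of those transformations was applied to the input. The minimal NumPy sketch below assumes only 90-degree rotations (so invariance is exact on the pixel grid) and uses a fixed random linear map with ReLU as a hypothetical stand-in for the shared CNN branch; it is not the released TensorFlow or Torch7 code.

```python
import numpy as np

def make_feature_extractor(size, n_features, seed=0):
    """Hypothetical stand-in for a shared CNN branch: a fixed random
    linear map on the flattened image followed by a ReLU."""
    rng = np.random.default_rng(seed)
    weights = rng.standard_normal((n_features, size * size))

    def extract(image):
        return np.maximum(weights @ image.ravel(), 0.0)

    return extract

def ti_pool(image, extract, n_rotations=4):
    """Apply the same extractor to every 90-degree rotation of the input
    and take the element-wise maximum over the transformation axis."""
    responses = [extract(np.rot90(image, k)) for k in range(n_rotations)]
    return np.max(np.stack(responses), axis=0)

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    img = rng.standard_normal((8, 8))
    extract = make_feature_extractor(8, 16)
    a = ti_pool(img, extract)
    b = ti_pool(np.rot90(img), extract)
    print(np.allclose(a, b))  # True: pooled features ignore 90-degree rotations
```

The invariance is exact here because the four rotations form a group: rotating the input only permutes the four responses before the max is taken.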

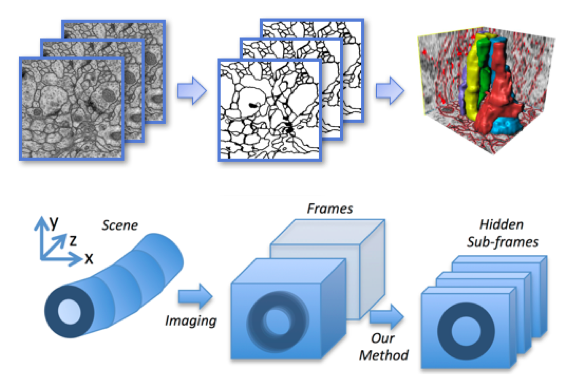

Anisotropic data analysis

An anisotropic data set is a collection of sequential images representing the continuous evolution of structures, in which the resolution along one dimension of the stack is much lower than the resolution along the other two. Two examples are serial section transmission electron microscopy (ssTEM) and low frame rate video data.

The key to anisotropic data analysis is to exploit dependencies between the images (frames) of the stack to resolve ambiguities. To find dependent regions, we use registration techniques such as optical flow. We developed two novel approaches: one for data enhancement and one for neuronal segmentation.

- "SuperSlicing Frame Restoration for Anisotropic ssTEM" D. Laptev, A. Vezhnevets, J.M. Buhmann, ISBI 2014. Paper, poster. Extended to video in an NCW ECML paper and presentation.

- "Anisotropic ssTEM Image Segmentation Using Dense Correspondence Across Sections" D. Laptev, A. Vezhnevets, S. Dwivedi, J.M. Buhmann, MICCAI 2012. Paper, poster and review paper.
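The core operation behind using dense correspondences is warping information from one section onto its neighbour. A minimal sketch, assuming a dense flow field between adjacent sections has already been estimated by some optical flow method (not shown); `warp_labels` is a hypothetical helper that propagates a label map by backward nearest-neighbour warping and does not reproduce the pipelines of the papers above.

```python
import numpy as np

def warp_labels(source_labels, flow):
    """Backward-warp a label map from section k onto section k+1.

    flow has shape (H, W, 2): flow[y, x] = (dy, dx) means pixel (y, x)
    in the target section corresponds to (y + dy, x + dx) in the source.
    Nearest-neighbour sampling keeps the labels integral; coordinates
    falling outside the image are clamped to the border.
    """
    h, w = source_labels.shape
    ys, xs = np.mgrid[0:h, 0:w]
    src_y = np.clip(np.rint(ys + flow[..., 0]).astype(int), 0, h - 1)
    src_x = np.clip(np.rint(xs + flow[..., 1]).astype(int), 0, w - 1)
    return source_labels[src_y, src_x]

if __name__ == "__main__":
    labels = np.zeros((4, 6), dtype=int)
    labels[:, 2] = 1                     # a vertical membrane at column 2
    flow = np.zeros((4, 6, 2))
    flow[..., 1] = -1.0                  # the structure drifts one pixel right
    print(warp_labels(labels, flow)[0])  # [0 0 0 1 0 0]
```

In a segmentation setting, the warped labels (or probabilities) from neighbouring sections could then be combined with the evidence in the current section to resolve locally ambiguous regions.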

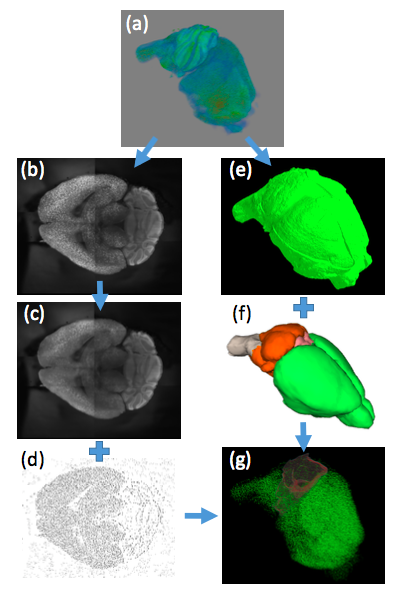

Biologically-motivated priors

We introduce a framework to automatically tune hyper-parameters of machine learning algorithms by employing external global statistics on the structures of interest. We focus on medical imaging problems, where these statistics naturally come from prior biological knowledge.

As an example, we developed the first whole-brain algorithm for fully automated amyloid plaque detection and analysis in cleared mouse brains. The algorithm significantly reduces human involvement, providing less subjective and more biologically plausible results, and hopefully brings humanity one step closer to developing a cure for Alzheimer's disease.

- Two papers are in preparation. Feel free to contact me if you are interested in learning more.
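As a toy illustration of tuning a hyper-parameter from a global statistic, the sketch below picks a detection threshold so that the fraction of pixels marked as foreground matches an externally known value. Both the statistic (`expected_fraction`) and the grid search are assumptions for illustration; the framework in the papers is not described here and may work differently.

```python
import numpy as np

def tune_threshold(score_map, expected_fraction, candidates=None):
    """Pick the detection threshold whose foreground fraction best matches
    an externally known global statistic.

    expected_fraction is a hypothetical prior, e.g. the fraction of tissue
    typically occupied by the structures of interest.
    """
    if candidates is None:
        candidates = np.linspace(score_map.min(), score_map.max(), 201)
    # Foreground fraction produced by each candidate threshold.
    fractions = np.array([(score_map > t).mean() for t in candidates])
    best = int(np.argmin(np.abs(fractions - expected_fraction)))
    return candidates[best], fractions[best]

if __name__ == "__main__":
    scores = np.arange(100).reshape(10, 10) / 100.0  # synthetic detector output
    threshold, fraction = tune_threshold(scores, expected_fraction=0.25)
    print(float(fraction))  # 0.25
```

The appeal of this kind of tuning is that no manually annotated images are needed to set the threshold; the biological prior replaces them.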

Cryptocurrency algotrading

A for-fun and for-experience project. I played around with various simple trading strategies (arbitrage, trend following, market making), with the APIs of multiple exchanges (Kraken, BTC-e, Bittrex and the now-defunct Cryptsy) and with asynchronous programming in Python. In case you are wondering, it was slightly profitable for a while, when market volatility was very high and competition was low; afterwards it was not.
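A minimal sketch of the simplest of these strategies, cross-exchange arbitrage, combined with `asyncio` to poll several order books concurrently. The exchange names, prices and the flat 0.2% taker fee are hypothetical placeholders, and `fetch_top_of_book` only simulates a network call; a real bot would also have to account for withdrawal delays, slippage and balances on each exchange.

```python
import asyncio

# Hypothetical top-of-book snapshots; a real bot would query each
# exchange's API here instead of returning canned numbers.
FAKE_BOOKS = {
    "exchange_a": {"bid": 100.0, "ask": 100.2},
    "exchange_b": {"bid": 101.0, "ask": 101.3},
}

async def fetch_top_of_book(exchange):
    await asyncio.sleep(0)  # stands in for network latency
    return exchange, FAKE_BOOKS[exchange]

def arbitrage_profit(ask_buy, bid_sell, fee_buy, fee_sell):
    """Profit per unit from buying at the ask on one exchange and selling
    at the bid on another, after proportional taker fees."""
    return bid_sell * (1 - fee_sell) - ask_buy * (1 + fee_buy)

async def scan(exchanges, fee=0.002):
    # Fetch all order books concurrently rather than one after another.
    books = dict(await asyncio.gather(*(fetch_top_of_book(e) for e in exchanges)))
    opportunities = []
    for buy in exchanges:
        for sell in exchanges:
            if buy == sell:
                continue
            p = arbitrage_profit(books[buy]["ask"], books[sell]["bid"], fee, fee)
            if p > 0:
                opportunities.append((buy, sell, round(p, 4)))
    return opportunities

if __name__ == "__main__":
    print(asyncio.run(scan(["exchange_a", "exchange_b"])))
    # [('exchange_a', 'exchange_b', 0.3976)]
```

Concurrency matters here because quotes go stale quickly: fetching order books sequentially widens the time gap between the prices being compared.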

I also have a Telegram channel where I share thoughts and news about crypto (in Russian): https://t.me/cryptohodl.

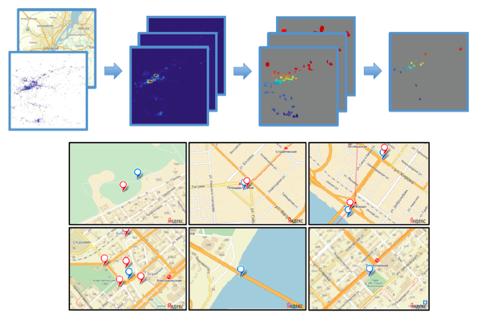

Mining areas of interest

The idea of the project was to develop a universal, tuning-insensitive algorithm for mining attractive areas from geo-tagged photos uploaded to social networks. The goal was to provide not only points but whole regions that may interest tourists. The project was developed for Yandex.

- "Parameter-Free Discovery and Recommendation of Areas-of-Interest" D. Laptev, A. Tikhonov, P. Serdyukov, G. Gusev, SigSpatial 2014. Paper, presentation, patent application.

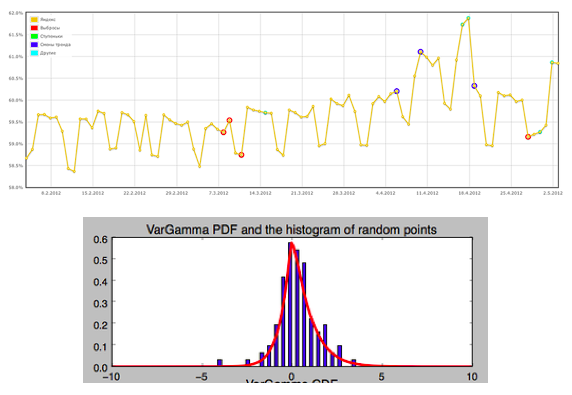

Time-series anomaly detection

The goal was to develop a system that detects, analyses and classifies abnormal behavior (outliers, changes of trend) in Internet markets. The time-series analysis involved independent modelling of the trend, the seasonal component and various noise models. The project was developed for Yandex.
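The decomposition step can be sketched as follows, assuming an additive model, an odd seasonal period, a moving-average trend and a robust (median/MAD) z-score on the residual for outlier detection. These are common textbook choices, not necessarily the ones used in the Yandex system, and the sketch does not cover trend-change detection.

```python
import numpy as np

def decompose(x, period):
    """Additive decomposition x = trend + seasonal + residual.

    Trend: centred moving average over one full period (edges padded by
    repeating the first/last value). Seasonal: mean detrended value for
    each phase, shifted so the phases sum to zero.
    """
    if period % 2 == 0:
        raise ValueError("this sketch assumes an odd period")
    half = period // 2
    padded = np.pad(x, half, mode="edge")
    trend = np.convolve(padded, np.ones(period) / period, mode="valid")
    detrended = x - trend
    phase_means = np.array([detrended[p::period].mean() for p in range(period)])
    phase_means -= phase_means.mean()
    seasonal = phase_means[np.arange(len(x)) % period]
    return trend, seasonal, x - trend - seasonal

def robust_anomalies(residual, threshold=3.5):
    """Indices whose robust z-score (based on median and MAD) exceeds threshold."""
    med = np.median(residual)
    mad = np.median(np.abs(residual - med)) or 1e-12  # avoid division by zero
    z = 0.6745 * (residual - med) / mad
    return np.flatnonzero(np.abs(z) > threshold)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t = np.arange(70)
    x = 0.05 * t + np.sin(2 * np.pi * t / 7) + rng.normal(0, 0.3, t.size)
    x[30] += 5.0  # injected outlier
    _, _, resid = decompose(x, period=7)
    print(30 in robust_anomalies(resid))  # True
```

The median/MAD scale is used instead of mean/standard deviation so that the outliers being searched for do not inflate the noise estimate that is supposed to expose them.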

As a side effect of this project, I also developed a small module for modelling the Variance Gamma (VarGamma) distribution. See GitHub.
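For reference, one standard way to simulate the Variance Gamma distribution is as a normal mean-variance mixture with a gamma-distributed mixing variable. The sketch below uses the common (mu, theta, sigma, nu) parameterisation; it is an independent illustration, not the API of the GitHub module mentioned above.

```python
import numpy as np

def sample_variance_gamma(mu, theta, sigma, nu, size, seed=None):
    """Draw Variance Gamma samples via the normal mean-variance mixture
    X = mu + theta * G + sigma * sqrt(G) * Z,
    with G ~ Gamma(shape=1/nu, scale=nu) (so E[G] = 1, Var[G] = nu)
    and Z ~ N(0, 1) independent of G."""
    rng = np.random.default_rng(seed)
    g = rng.gamma(shape=1.0 / nu, scale=nu, size=size)
    z = rng.standard_normal(size)
    return mu + theta * g + sigma * np.sqrt(g) * z

def variance_gamma_moments(mu, theta, sigma, nu):
    """Theoretical mean and variance under the same parameterisation."""
    return mu + theta, sigma**2 + theta**2 * nu

if __name__ == "__main__":
    s = sample_variance_gamma(0.0, 0.5, 1.0, 0.5, 200_000, seed=42)
    print(s.mean(), s.var())                           # empirical
    print(variance_gamma_moments(0.0, 0.5, 1.0, 0.5))  # theoretical
```

Compared with a Gaussian, this family adds a skewness parameter (theta) and a tail-heaviness parameter (nu), which is why it is a popular noise model for financial and market data.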

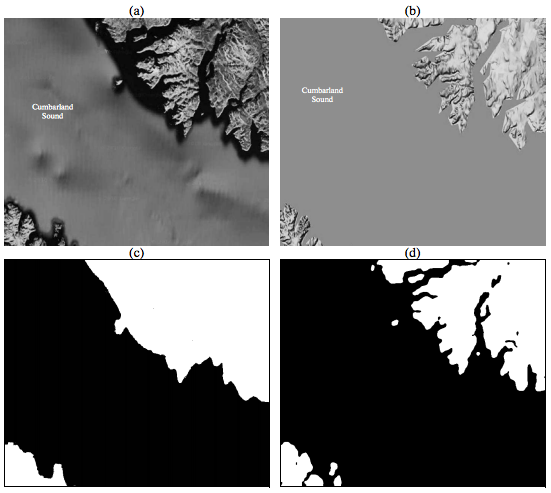

Solar activity forecast

As part of my diploma thesis, I worked on short-term solar activity prediction from magnetogram images. The main parts of the pipeline were detecting active regions in the image, segmenting them, and computing features from the segmented areas. The project was done in collaboration with the Russian Space Research Institute and Microsoft Research Cambridge.

- "Short-term solar flare forecast" V. Chernyshov, D. Laptev, D. Vetrov, Graphicon 2011. Paper, poster.

- "Searching for informative features in Magnetogram Solar images" D. Laptev, Diploma thesis, Lomonosov Moscow State University 2011. Text (in Russian).

- "Searching for active regions in the image" D. Laptev, Lomonosov 2011. Abstract (in Russian).
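The first stages of such a pipeline (detecting and segmenting active regions, then computing per-region features) can be sketched as thresholding the absolute field strength and labelling connected components. The threshold value and the features (area, unsigned and net flux, a mixed-polarity flag) are illustrative assumptions, not the features selected in the thesis.

```python
import numpy as np
from collections import deque

def find_active_regions(magnetogram, threshold):
    """Label 4-connected regions where |B| exceeds threshold (breadth-first flood fill)."""
    mask = np.abs(magnetogram) > threshold
    labels = np.zeros(magnetogram.shape, dtype=int)
    h, w = mask.shape
    n = 0
    for y0 in range(h):
        for x0 in range(w):
            if mask[y0, x0] and labels[y0, x0] == 0:
                n += 1
                labels[y0, x0] = n
                queue = deque([(y0, x0)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and labels[ny, nx] == 0:
                            labels[ny, nx] = n
                            queue.append((ny, nx))
    return labels, n

def region_features(magnetogram, labels, n):
    """Illustrative per-region features: area, unsigned flux, net flux, polarity mix."""
    feats = []
    for k in range(1, n + 1):
        b = magnetogram[labels == k]
        feats.append({
            "area": int(b.size),
            "unsigned_flux": float(np.abs(b).sum()),
            "net_flux": float(b.sum()),
            "bipolar": bool((b > 0).any() and (b < 0).any()),
        })
    return feats

if __name__ == "__main__":
    mag = np.zeros((8, 8))
    mag[1:3, 1:3] = 300.0                                # unipolar patch
    mag[5:7, 4:6] = [[400.0, -400.0], [400.0, -400.0]]   # bipolar patch
    labels, n = find_active_regions(mag, threshold=100.0)
    print(n)  # 2
    for f in region_features(mag, labels, n):
        print(f)
```

The feature vectors from each region can then be fed to any classifier that predicts flare activity in the following hours.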

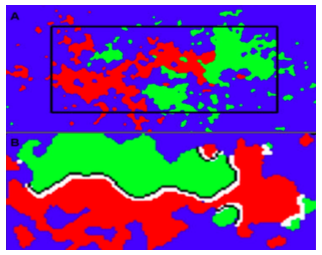

Global priors for segmentation

We developed two techniques for solving signal or image segmentation problems with incorporated global constraints, such as the distribution of signal states or the number of pixels in each segment. Subjects learned: HMM, MRF, EM algorithm, dual decomposition, variational approximation and combinatorial optimization.

- "Signal Segmentation with Label Frequency Constraints using Dual Decomposition Approach for Hidden Markov Models" D. Kropotov, D. Laptev, A. Osokin, D. Vetrov, Intellectualization of Information Processing 2010. Paper.

- "Variational Segmentation Algorithms with Label Frequency Constraints" D. Kropotov, D. Laptev, A. Osokin, D. Vetrov, PRIA 2010. Paper.
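To illustrate how a label frequency constraint can be attached to HMM decoding, the sketch below relaxes the constraint "state k occurs exactly c_k times" with Lagrange multipliers added to the emission scores, and updates them by subgradient steps around standard Viterbi decoding. This is a simplified Lagrangian-relaxation sketch in the spirit of the dual decomposition approach, not the algorithm from the papers; the step schedule and iteration count are arbitrary choices.

```python
import numpy as np

def viterbi(log_emit, log_trans, log_start):
    """Most probable state sequence; log_emit has shape (T, K)."""
    T, K = log_emit.shape
    score = log_start + log_emit[0]
    back = np.zeros((T, K), dtype=int)
    for t in range(1, T):
        cand = score[:, None] + log_trans  # cand[i, j]: best path ending i -> j
        back[t] = np.argmax(cand, axis=0)
        score = cand[back[t], np.arange(K)] + log_emit[t]
    path = [int(np.argmax(score))]
    for t in range(T - 1, 0, -1):
        path.append(int(back[t, path[-1]]))
    return np.array(path[::-1])

def constrained_viterbi(log_emit, log_trans, log_start, target_counts,
                        n_iter=50, step=0.1):
    """Lagrangian relaxation of 'state k occurs target_counts[k] times':
    add multipliers lam[k] to the emissions, decode, then move lam along
    the subgradient (target - counts). Keep the path closest to the targets."""
    K = log_emit.shape[1]
    lam = np.zeros(K)
    best_path, best_gap = None, np.inf
    for it in range(n_iter):
        path = viterbi(log_emit + lam, log_trans, log_start)
        counts = np.bincount(path, minlength=K)
        gap = np.abs(counts - target_counts).sum()
        if gap < best_gap:
            best_path, best_gap = path, gap
        if gap == 0:
            break
        lam += step / (1 + it) * (target_counts - counts)  # diminishing step size
    return best_path, best_gap

if __name__ == "__main__":
    margins = np.linspace(0.1, 1.0, 10)
    log_emit = np.stack([margins, np.zeros(10)], axis=1)  # state 0 always preferred
    log_trans = np.log(np.full((2, 2), 0.5))
    log_start = np.log(np.full(2, 0.5))
    print(viterbi(log_emit, log_trans, log_start))  # [0 0 0 0 0 0 0 0 0 0]
    path, gap = constrained_viterbi(log_emit, log_trans, log_start, np.array([5, 5]))
    print(np.bincount(path, minlength=2), gap)
```

The multipliers act like per-label biases: a label that appears too rarely gets its score raised until enough positions switch to it, while the transition model still shapes where those switches happen.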

Other stuff

- I participate in Startup Stammtisch - a regular consulting event for startups organized by ImpactHub and Google.

- As a relaxed outdoor activity, I have compiled a rather detailed map of the Zurich Street Art scene.

- To learn more about Google Cloud Platform and the Telegram Bot API, I coded up a small Vzhuh (вжух) meme bot. The bot and the code.

- NeuroPutin. A fun speech recognition and audio processing project for automated clip generation.

- For a while I experimented with different techniques for learning Bayesian networks (causal inference) from large-scale data.

- I taught Computational Intelligence Lab and Introduction to Machine Learning at ETH Zurich, and supervised multiple master's projects.

- My very first research project was to help biologists count cells (developing an automated method for tracking the cell division rate).

- I enjoy casual mobile and web development. I coded multiple toy puzzle games, educational applications and a couple of commercial projects.